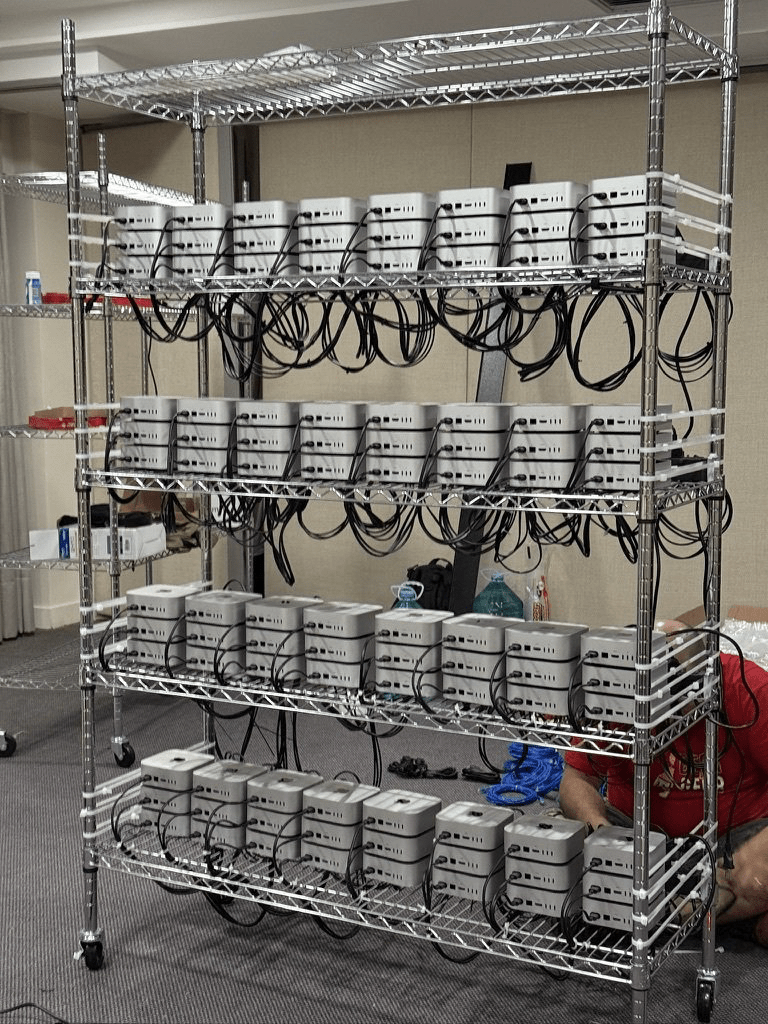

How open‑wire Mobile Wire Cluster Racks deliver cooler, denser, faster deployments than traditional 19‑inch cabinets

The DevOps bottleneck isn’t silicon; it’s furniture

Teams racing to add CI runners, GPU nodes, or edge servers usually hit the same wall: closed server cabinets cost thousands, arrive weeks late, and cook small‑form‑factor PCs.

An open‑frame wire rack solves all three problems at once, and then some:

- Exhaust heat & hot spots: 360° airflow keeps intake temperatures within the recommended 18–27 °C band without extra fans.
- Long lead times for custom sleds: An off‑the‑shelf rack ships today, and one person can assemble it in under 15 minutes.
- Limited floor space: A 48‑inch‑high unit parks up to 60 mini‑PCs (or GPU boxes) on just six square feet.
- Scaling on‑site labs: Heavy‑duty casters let you roll an entire cluster between offices, events, or classrooms.

Why open‑wire shelving wins for modern workloads

Cooler hardware = faster jobs

Wire shelves act like built‑in plenum floors: hot air rises freely, so CPUs and GPUs seldom throttle, even under sustained parallel compiles or inference runs. Intake temperatures stay only a few degrees above ambient.

Denser capacity without proprietary trays

A single rack swallows everything from Intel NUCs and Dell Micros to Jetson Orins and RTX‑powered SFF rigs, with no pricey one‑off sleds required.

True plug‑and‑play mobility

Four industrial casters and 600 lb‑per‑shelf ratings mean you can cable the farm in a staging area, wheel it to the IDF closet, or demo it at a trade show without a forklift or a raised floor.

Built‑in cable‑management grid

The ⅜‑inch wire lattice gives you the equivalent of a lacing bar every inch, so a roll of Velcro or zip ties is all you need to keep data, power, and sensor leads neat.
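A quick back‑of‑the‑envelope check helps confirm whether your own hardware fits the density and load figures above. The Python sketch below is a hypothetical planning aid, not a product tool: only the 600 lb per‑shelf rating, the five‑shelf count, and the 60‑node target come from this article. The 36 × 24 in shelf (six square feet), the 1‑inch airflow gap, and the mini‑PC dimensions and weight are assumed placeholders you should replace with measured values.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    """A compute node. All figures passed in are hypothetical placeholders."""
    width_in: float   # footprint width in inches
    depth_in: float   # footprint depth in inches
    weight_lb: float  # weight in pounds (include the power brick if it sits on the shelf)


def nodes_per_shelf(node: Node, shelf_w_in: float, shelf_d_in: float, gap_in: float = 1.0) -> int:
    """Count identical nodes in a simple grid, leaving gap_in between units for airflow."""
    # n units of width w with gaps g fit in W when n*w + (n-1)*g <= W, i.e. n <= (W+g)/(w+g)
    cols = math.floor((shelf_w_in + gap_in) / (node.width_in + gap_in))
    rows = math.floor((shelf_d_in + gap_in) / (node.depth_in + gap_in))
    return cols * rows


def plan_rack(node: Node, target_nodes: int, shelves: int = 5,
              shelf_w_in: float = 36.0, shelf_d_in: float = 24.0,  # assumed 6 sq ft shelf
              shelf_rating_lb: float = 600.0) -> dict:
    """Check footprint and per-shelf load limits for a target node count."""
    by_area = nodes_per_shelf(node, shelf_w_in, shelf_d_in)
    by_weight = math.floor(shelf_rating_lb / node.weight_lb)
    per_shelf = min(by_area, by_weight)  # the tighter of the two limits wins
    if per_shelf == 0:
        raise ValueError("node does not fit on a shelf")
    shelves_needed = math.ceil(target_nodes / per_shelf)
    return {
        "per_shelf": per_shelf,
        "rack_capacity": per_shelf * shelves,
        "shelves_needed": shelves_needed,
        "fits": shelves_needed <= shelves,
    }


if __name__ == "__main__":
    # Hypothetical mini-PC: 4.5 x 4.5 in footprint, 2 lb.
    mini_pc = Node(width_in=4.5, depth_in=4.5, weight_lb=2.0)
    print(plan_rack(mini_pc, target_nodes=60))
```

With these placeholder numbers the sketch reports 24 nodes per shelf, so a 60‑node build needs three of the five shelves. The grid math ignores power bricks, cables, and PDUs, so treat `rack_capacity` as an upper bound rather than a promise.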

Real‑world deployments you can replicate

- CI/CD build farms: 30–100 mini‑PCs drive parallel iOS, Android, and web builds at a cost of just a few dollars per node compared with cloud runners.
- Edge‑AI inference labs: Jetson or Intel Arc rigs stay below 70 °C through 24‑hour YOLOv8 inference loops, thanks to unrestricted airflow.
- University hackathons: Mixed single‑board computers and eGPU boxes roll off a pallet Friday afternoon and are ready for students by dinner.
- Render / transcode queues: Mac Studio or RTX 4090 SFF systems stay quiet enough for edit bays, and the entire farm slides behind a sound baffle when needed.

Configuration tips for peak efficiency

- Shelf spacing: Leave 6–7 inches above the tallest chassis, then verify the vertical draft with a smoke pen.
- Power strips: Mount low‑profile PDUs on the rear uprights to keep cords short and tidy.
- Thermal monitoring: Zip‑tie three USB temperature probes (bottom, middle, top) and alert at 30 °C intake; it's cheap insurance against a tripped HVAC unit.
- Color‑code cables: Blue for data, black for power, so midnight swap‑outs become painless.
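The thermal‑monitoring tip reduces to a threshold check on three readings. The Python sketch below assumes you already have some way to read each probe (that part depends on the probe vendor and is left out); the `check_intake` function and the probe names are hypothetical. It raises an alert at or above 30 °C and also flags readings that drift outside the 18–27 °C band cited at the top of this post.

```python
from typing import Dict, List

ALERT_C = 30.0                     # alert threshold from the tip above
RECOMMENDED_BAND_C = (18.0, 27.0)  # intake band cited earlier in the article


def check_intake(readings: Dict[str, float]) -> List[str]:
    """Return warnings for a mapping of probe name -> intake temperature in °C.

    Probe names (e.g. "bottom", "middle", "top") are hypothetical; reading the
    actual USB probes is vendor-specific and not shown here.
    """
    low, high = RECOMMENDED_BAND_C
    warnings = []
    for probe, temp in sorted(readings.items()):
        if temp >= ALERT_C:
            warnings.append(f"ALERT {probe}: {temp:.1f} °C >= {ALERT_C:.0f} °C")
        elif not (low <= temp <= high):
            warnings.append(f"warn {probe}: {temp:.1f} °C outside {low:.0f}-{high:.0f} °C")
    return warnings


if __name__ == "__main__":
    # Example readings; in practice these would come from your probes.
    sample = {"bottom": 22.5, "middle": 27.8, "top": 31.2}
    for line in check_intake(sample):
        print(line)
```

Keeping the check as a pure function makes it easy to run from cron or a systemd timer and route the warnings into whatever alerting channel your team already uses.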

Ready to roll your own cluster?

Our Mobile Wire Cluster Rack ships flat‑packed with:

- Five chrome wire shelves (600 lb rating each) in the size you choose
- Four locking 5‑inch industrial casters

Stop waiting on back‑ordered cabinets and deploy 60 nodes this weekend for a fraction of the cost. Explore specs, customer builds, and live temperature graphs on the product page, or chat with our solutions team for a free sizing worksheet.

Industrial4Less has supplied open‑wire cluster racks to DevOps teams, VFX studios, and university AI labs across North America. Join them and turn any room into a high‑density compute lab, no raised floor required.